Pre / Post Designs

Pre / Post Designs

Pre / Post designs are common designs in the social sciences and beyond. These types of designs are such that two measurement occasions are collected for each person in the study. Typically, the pre-measurement occasion occurs before a treatment and then the post-measurement occasions occurs after the treatment has happened. This gives a glimpse to whether the treatment impacts the a specific outcome.

Below are a few scenarios that could be studied with a pre / post design.

- Does a new drug reduce blood pressure in those with high blood pressure?

- Does a yoga regimine reduce pain in those that have fibromyalgia?

- Does professional development increase knowledge related to acceleration options for students?

- Does a finance workshop improve financial health and wealth of individuals?

Each of these could be conducted so that two measurement occasions could be used. First, the measurement before the treatment would be collected, then the treatment is given, then the post-measurement could explore if the treatment is effective. This is a type of within study design that is popular because each individual acts as its own control group. They are also easier to implement than longitudinal studies that would collect more than 2 measurement occasions.

Analyzing Pre / Post Designs

There are more than one way to analyze pre/post designs. These are largely derived from the research questions and also a statistical consideration related to balance. Let’s first think about the research questions.

There first class of research questions talk about change. For example, using the scenarios above, how much does the drug lower blood pressure or how much does the financial workshop improve financial health?

The second class of research questions talk about what is the average post score after adjusting for pre scores. This is inherently a conditional mean problem whereas the previous example is an unconditional problem. For example, using the scenarios above, do those with the same initial blood pressure have different blood pressure levels at follow-up?

Example

We are going to use simulated data based on a real study. The study is:

Kathryn Curtis, Anna Osadchuk & Joel Katz (2011) An eight-week yoga intervention is associated with improvements in pain, psychological functioning and mindfulness, and changes in cortisol levels in women with fibromyalgia, Journal of Pain Research, , 189-201, DOI: 10.2147/JPR.S22761

For this analysis we are going to focus on pain as a single outcome. The study itself had many different outcomes that they explored the impact of the 8-week yoga intervention. I’m also simplifying the study’s actual design (they collected survey measurements at 3 time points). I am going to focus the data simulation from a self-report measure of 11 items named the Pain Disability Index. This measure quantifies the extent to which an individual’s pain impacts seven daily activities.

Table 3 of the paper contains the means and standard deviations for the outcome that we will use for the simulation. Most notably, the mean PDI score for the group before the yoga intervention was 38.14 (SD was 17.17) and follow up was 33.81 (14.43). This resulted in a reductino in the self-report measure of pain of 4.33.

library(tidyverse)## ── Attaching core tidyverse packages ──────────────────────── tidyverse 2.0.0 ──

## ✔ dplyr 1.1.4 ✔ readr 2.1.5

## ✔ forcats 1.0.0 ✔ stringr 1.5.1

## ✔ ggplot2 3.4.4 ✔ tibble 3.2.1

## ✔ lubridate 1.9.3 ✔ tidyr 1.3.1

## ✔ purrr 1.0.2

## ── Conflicts ────────────────────────────────────────── tidyverse_conflicts() ──

## ✖ dplyr::filter() masks stats::filter()

## ✖ dplyr::lag() masks stats::lag()

## ℹ Use the conflicted package (<http://conflicted.r-lib.org/>) to force all conflicts to become errorslibrary(ggformula)## Loading required package: scales

##

## Attaching package: 'scales'

##

## The following object is masked from 'package:purrr':

##

## discard

##

## The following object is masked from 'package:readr':

##

## col_factor

##

## Loading required package: ggridges

##

## New to ggformula? Try the tutorials:

## learnr::run_tutorial("introduction", package = "ggformula")

## learnr::run_tutorial("refining", package = "ggformula")library(mosaic)## Registered S3 method overwritten by 'mosaic':

## method from

## fortify.SpatialPolygonsDataFrame ggplot2

##

## The 'mosaic' package masks several functions from core packages in order to add

## additional features. The original behavior of these functions should not be affected by this.

##

## Attaching package: 'mosaic'

##

## The following object is masked from 'package:Matrix':

##

## mean

##

## The following object is masked from 'package:scales':

##

## rescale

##

## The following objects are masked from 'package:dplyr':

##

## count, do, tally

##

## The following object is masked from 'package:purrr':

##

## cross

##

## The following object is masked from 'package:ggplot2':

##

## stat

##

## The following objects are masked from 'package:stats':

##

## binom.test, cor, cor.test, cov, fivenum, IQR, median, prop.test,

## quantile, sd, t.test, var

##

## The following objects are masked from 'package:base':

##

## max, mean, min, prod, range, sample, sumlibrary(simglm)

theme_set(theme_bw(base_size = 16))

sim_args <- list(

formula = PDI ~ PDI_pre,

fixed = list(

PDI_pre = list(var_type = 'continuous',

mean = 0, sd = 17.17, floor = -38.14, ceiling = 31.86)

),

error = list(variance = 150),

sample_size = 50,

reg_weights = c(-4.33, -0.5)

)

pain_data <- simulate_fixed(data = NULL, sim_args) |>

simulate_error(sim_args) |>

generate_response(sim_args)

head(pain_data)## X.Intercept. PDI_pre level1_id error fixed_outcome random_effects

## 1 1 -1.993226 1 -16.4390105 -3.333387 0

## 2 1 -22.808193 2 -12.2931615 7.074097 0

## 3 1 18.659121 3 -6.5547319 -13.659561 0

## 4 1 -2.712311 4 0.1004026 -2.973845 0

## 5 1 0.833026 5 1.0588527 -4.746513 0

## 6 1 3.483902 6 28.9741467 -6.071951 0

## PDI

## 1 -19.772398

## 2 -5.219065

## 3 -20.214293

## 4 -2.873442

## 5 -3.687660

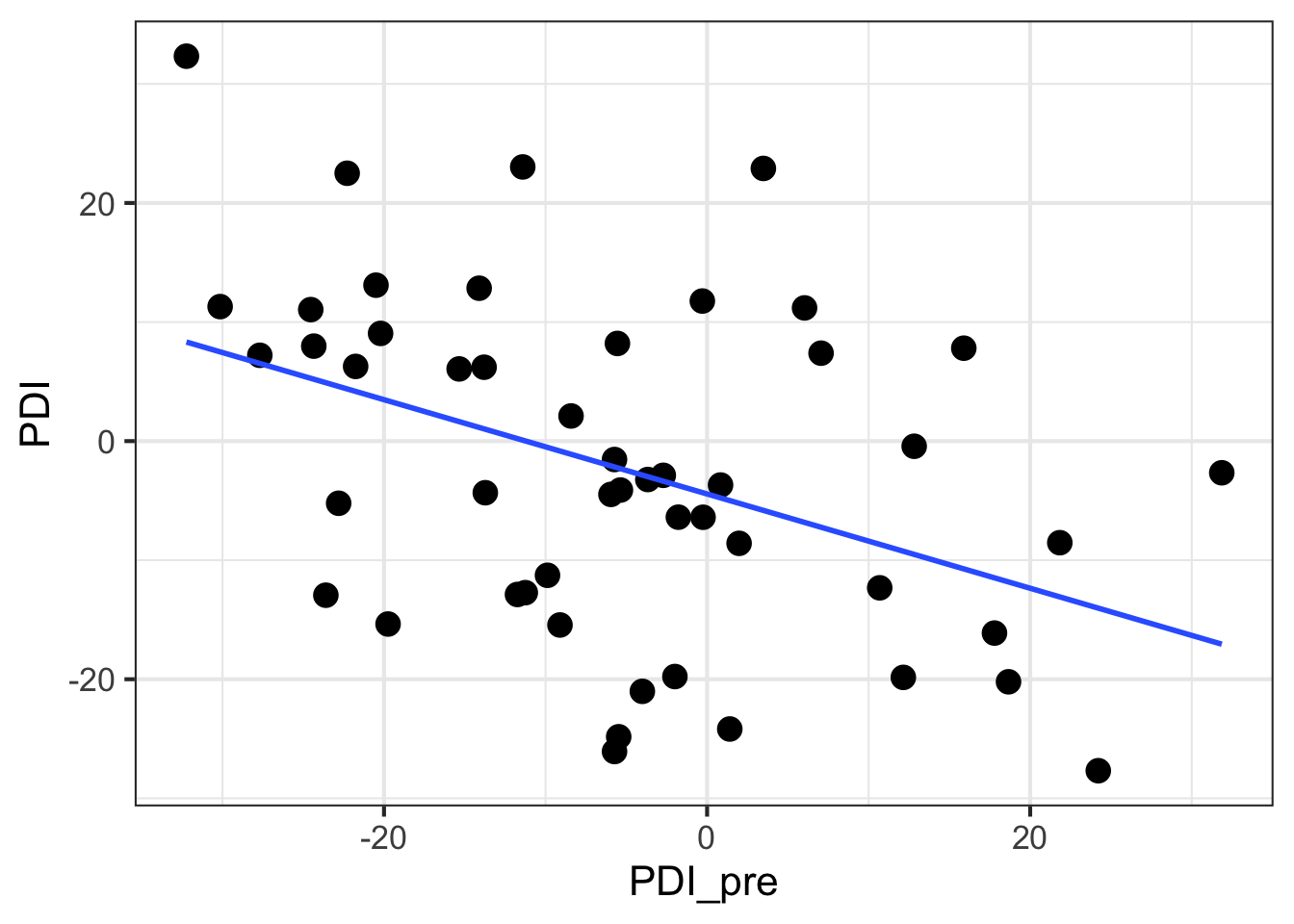

## 6 22.902196gf_point(PDI ~ PDI_pre, data = pain_data, size = 4) |>

gf_smooth(method = 'lm')

df_stats(~ PDI, data = pain_data, mean, sd, min, max)## response mean sd min max

## 1 PDI -2.297171 14.16733 -27.68183 32.32932df_stats(~ PDI_pre, data = pain_data, mean, sd, min, max)## response mean sd min max

## 1 PDI_pre -5.409555 14.93702 -32.23126 31.86cor(PDI ~ PDI_pre, data = pain_data)## [,1]

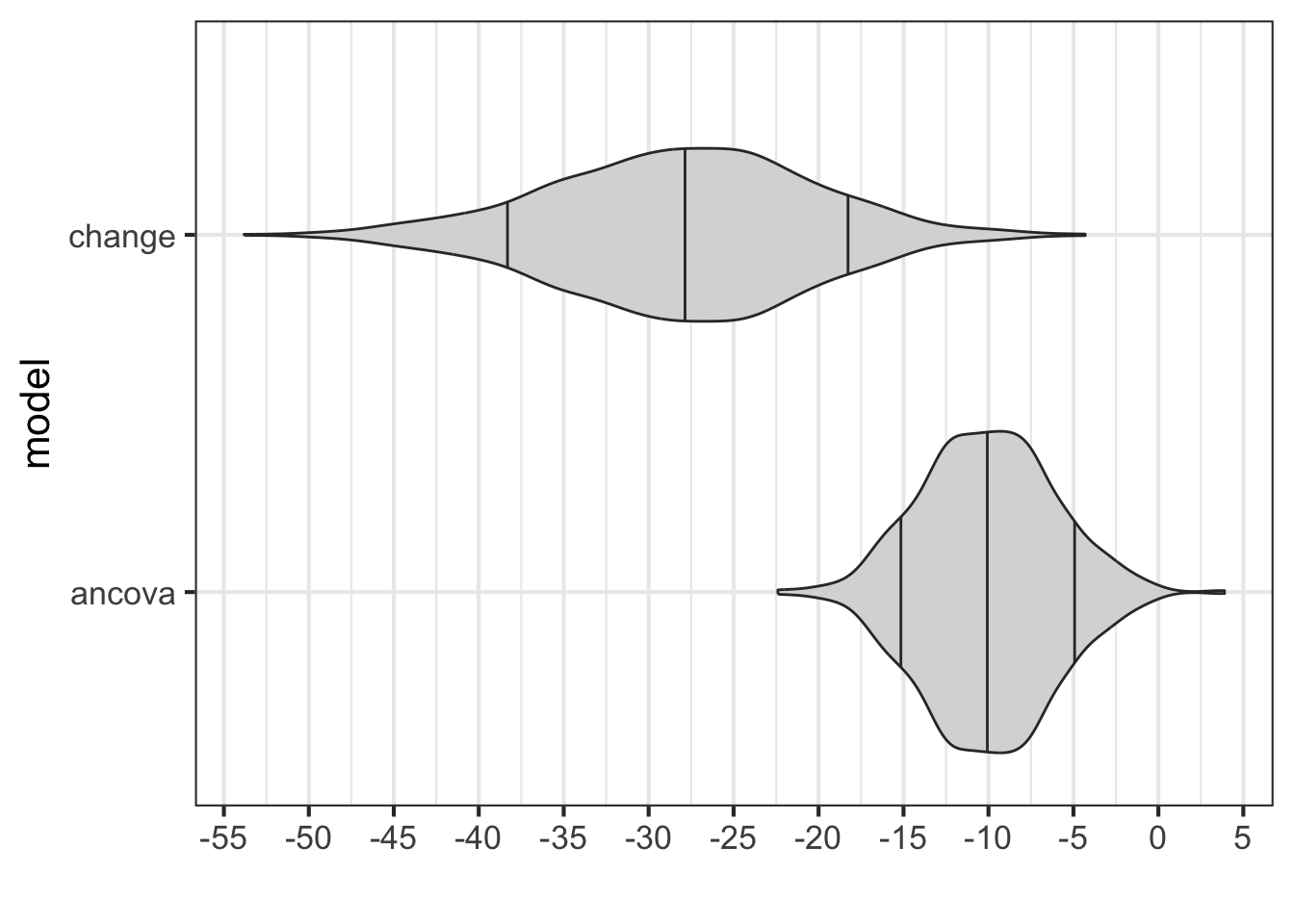

## [1,] -0.4174525Two competing models

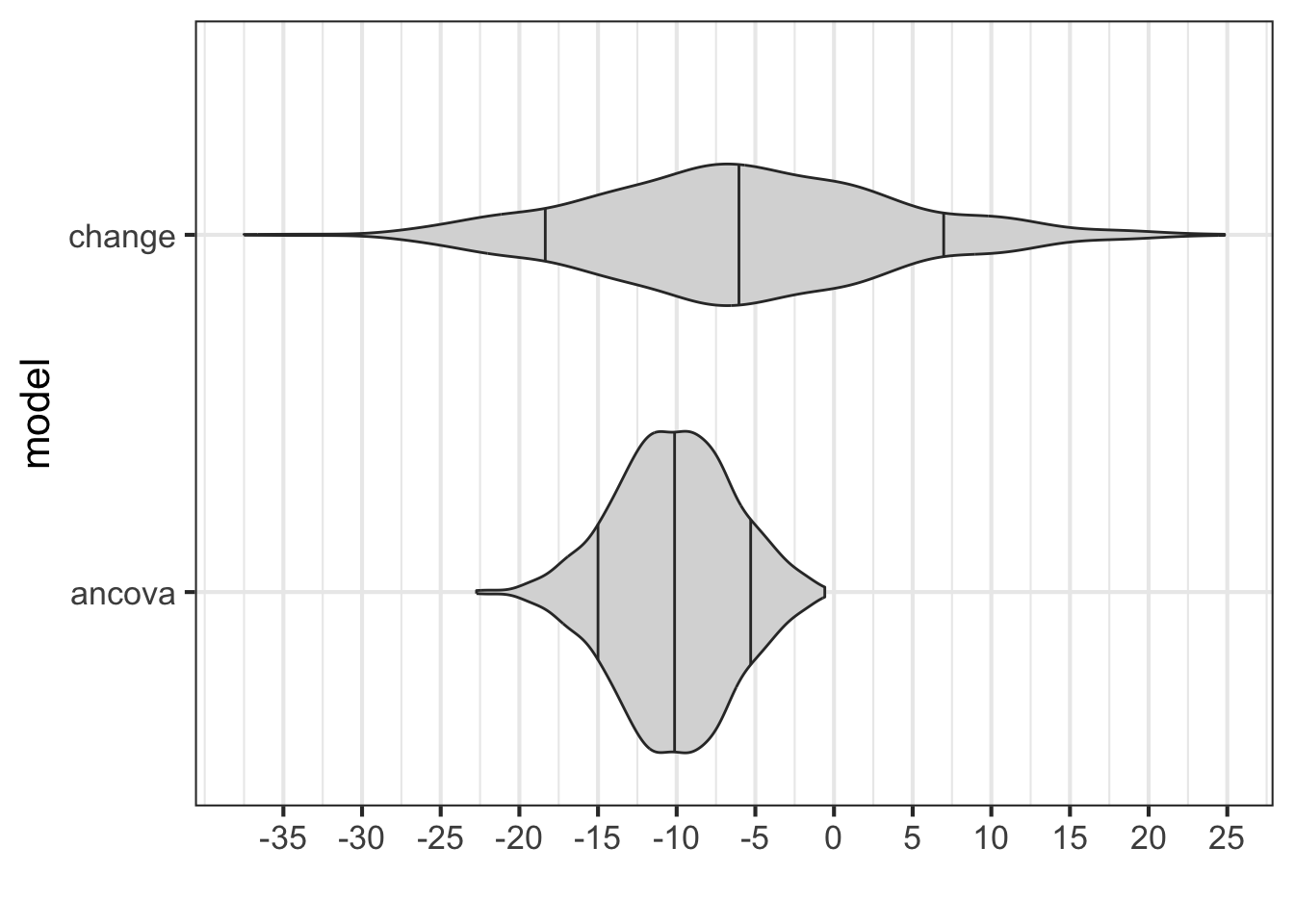

There are two competing models here that are often described in the literature. As discussed above, one is performed on the change scores, the other is done via statistical control. These two models are shown below for the simplest design with pre / post measurement occasions for a single group.

Change Scores

\[ Post - Pre = \beta_{0} + \epsilon \]

ANCOVA

\[ Post = \beta_{0} + \beta_{1} Pre + \epsilon \]

For both models, the term of interest is \(\beta_{0}\). This term would represent the average change score for the change score model and would represent the average post score after adjusting for the pre score respectively.

summary(

lm(I(PDI - PDI_pre) ~ 1, data = pain_data)

)##

## Call:

## lm(formula = I(PDI - PDI_pre) ~ 1, data = pain_data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -55.010 -15.693 -2.263 16.750 61.448

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 3.112 3.466 0.898 0.374

##

## Residual standard error: 24.51 on 49 degrees of freedompain_data <- pain_data |>

mutate(change = PDI - PDI_pre)

t.test(pain_data$change)##

## One Sample t-test

##

## data: pain_data$change

## t = 0.89809, df = 49, p-value = 0.3735

## alternative hypothesis: true mean is not equal to 0

## 95 percent confidence interval:

## -3.851938 10.076706

## sample estimates:

## mean of x

## 3.112384summary(

lm(PDI ~ PDI_pre, data = pain_data)

)##

## Call:

## lm(formula = PDI ~ PDI_pre, data = pain_data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -23.8998 -10.3989 0.5579 8.2536 28.7207

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -4.4390 1.9587 -2.266 0.02798 *

## PDI_pre -0.3959 0.1244 -3.183 0.00256 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 13.01 on 48 degrees of freedom

## Multiple R-squared: 0.1743, Adjusted R-squared: 0.1571

## F-statistic: 10.13 on 1 and 48 DF, p-value: 0.00256Iterate both models multiple times

These are one instance of simulated data. We can perform this process many times to get a sense as to how much variation there are in these estimates as well as typical values for the parameter of interest. Again, I’m going to use the simglm package to do this automatically. The code is not really that interesting here, unless you are interesting in learning R code. We will focus on the ideas from the output.

library(future)##

## Attaching package: 'future'## The following object is masked from 'package:mosaic':

##

## valueplan(multisession)

sim_args <- list(

formula = PDI ~ PDI_pre,

fixed = list(

PDI_pre = list(var_type = 'continuous',

mean = 0, sd = 17.17, floor = -38.14, ceiling = 31.86)

),

error = list(variance = 150),

sample_size = 50,

reg_weights = c(-4.33, -0.5),

replications = 1000

)

pain_data_repl <- replicate_simulation(sim_args)change_mod <- lapply(seq_along(pain_data_repl), function(xx)

broom::tidy(lm(I(PDI - PDI_pre) ~ 1, data = pain_data_repl[[xx]]))) |>

dplyr::bind_rows() |>

dplyr::mutate(model = 'change')

ancova_mod <- lapply(seq_along(pain_data_repl), function(xx)

broom::tidy(lm(PDI ~ PDI_pre, data = pain_data_repl[[xx]]))) |>

dplyr::bind_rows() |>

dplyr::mutate(model = 'ancova')

combine_data <- dplyr::bind_rows(

change_mod,

ancova_mod

)combine_data |>

dplyr::filter(term == '(Intercept)') |>

dplyr::mutate(term2 = 'Intercept') |>

gf_violin(model ~ estimate, fill = 'grey85', draw_quantiles = c(0.1, 0.5, 0.9)) |>

gf_refine(scale_x_continuous("", breaks = seq(-18, 9, 3))) |>

gf_vline(xintercept = 0, linewidth = 2, linetype = 'dotted')

Increase strength of design

A rather simple adjustment to the above design (not done in the original study) is to add a control group. The control group would not receive the treatment/intervention, but the same measurements would occur for those groups. This removes the counterfactual of having time pass. For example, adding the control group helps to answer the question about what would occur without any treatment just with the passage of time.

Having a control group does not automatically give a causal type research design. The key for those types of considerations is really in how the control group was selected. If it was done via random assignment, then this would be a causal type design due to the balanced nature of the control and treatment/intervention group at baseline or pre measurement. If random assignment was not done, then causal type claims can not be made.

Analysis of pre / post design with control group

Unlike above when there was no control group, the choice of ANCOVA and change scores can provide different results. Much of the difference is due to whether the control and treatment/intervention group are balanced at baseline. If random assignment was done and the groups are balanced (similar characteristics at baseline), then both ANCOVA and change scores should give similar results. However, if random assignment was not performed, then it is likely that there is sampling bias and the control and treatment/intervention groups would likely be non-equivalent at baseline. This would then need to be adjusted via ANCOVA. For example, if one group has a higher mean at baseline, this could mean they could be more likely to have bigger change, have regression to the mean, or be close to the ceiling for the measure.

Change Scores

\[ Post - Pre = \beta_{0} + \beta_{2} treat + \epsilon \]

ANCOVA

\[ Post = \beta_{0} + \beta_{1} Pre + \beta_{2} treat + \epsilon \]

For both models, the term of interest is \(\beta_{2}\). This term would represent the average treatment effect.

sim_args <- list(

formula = PDI ~ PDI_pre + treatment,

fixed = list(

PDI_pre = list(var_type = 'continuous',

mean = 0, sd = 17.17, floor = -38.14, ceiling = 31.86),

treatment = list(var_type = 'factor',

levels = c('control', 'treatment'))

),

error = list(variance = 150),

sample_size = 50,

reg_weights = c(0, -0.5, -10)

)

pain_data <- simulate_fixed(data = NULL, sim_args) |>

simulate_error(sim_args) |>

generate_response(sim_args)

head(pain_data)## X.Intercept. PDI_pre treatment_1 treatment level1_id error

## 1 1 17.021715 1 treatment 1 5.796060

## 2 1 17.749716 1 treatment 2 -9.253321

## 3 1 30.926273 0 control 3 19.142837

## 4 1 -6.265335 0 control 4 21.267342

## 5 1 1.444216 1 treatment 5 4.792722

## 6 1 -38.140000 1 treatment 6 4.508464

## fixed_outcome random_effects PDI

## 1 -18.510858 0 -12.714798

## 2 -18.874858 0 -28.128179

## 3 -15.463136 0 3.679701

## 4 3.132667 0 24.400009

## 5 -10.722108 0 -5.929386

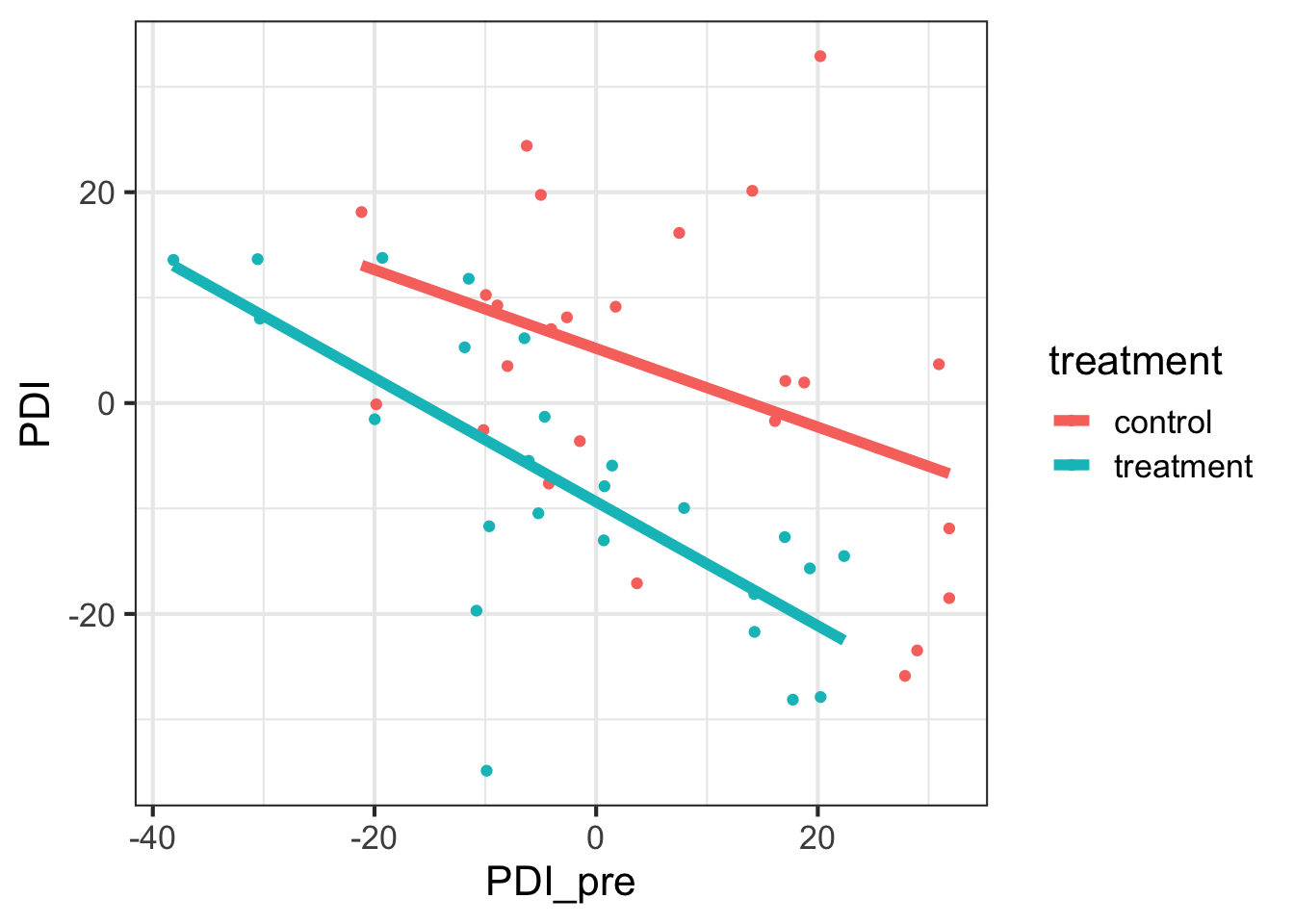

## 6 9.070000 0 13.578464gf_point(PDI ~ PDI_pre, data = pain_data, color = ~ treatment) |>

gf_smooth(method = 'lm', linewidth = 2)

df_stats(PDI ~ treatment, data = pain_data, mean, sd, min, max, length)## response treatment mean sd min max length

## 1 PDI control 2.959952 14.92032 -25.87475 32.89958 25

## 2 PDI treatment -7.531723 13.91319 -34.86905 13.76866 25df_stats(PDI_pre ~ treatment, data = pain_data, mean, sd, min, max, length)## response treatment mean sd min max length

## 1 PDI_pre control 5.959879 16.57644 -21.17164 31.86000 25

## 2 PDI_pre treatment -3.133879 16.95926 -38.14000 22.37469 25summary(lm(PDI ~ PDI_pre + treatment, data = pain_data))##

## Call:

## lm(formula = PDI ~ PDI_pre + treatment, data = pain_data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -30.593 -7.022 0.827 5.290 36.827

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 5.8364 2.4917 2.342 0.023450 *

## PDI_pre -0.4826 0.1039 -4.646 2.75e-05 ***

## treatmenttreatment -14.8806 3.5416 -4.202 0.000117 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 12.07 on 47 degrees of freedom

## Multiple R-squared: 0.3977, Adjusted R-squared: 0.3721

## F-statistic: 15.52 on 2 and 47 DF, p-value: 6.688e-06summary(lm(I(PDI - PDI_pre) ~ treatment, data = pain_data))##

## Call:

## lm(formula = I(PDI - PDI_pre) ~ treatment, data = pain_data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -50.743 -19.888 1.609 20.138 56.116

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -3.000 5.516 -0.544 0.589

## treatmenttreatment -1.398 7.801 -0.179 0.859

##

## Residual standard error: 27.58 on 48 degrees of freedom

## Multiple R-squared: 0.0006685, Adjusted R-squared: -0.02015

## F-statistic: 0.03211 on 1 and 48 DF, p-value: 0.8585Iterate

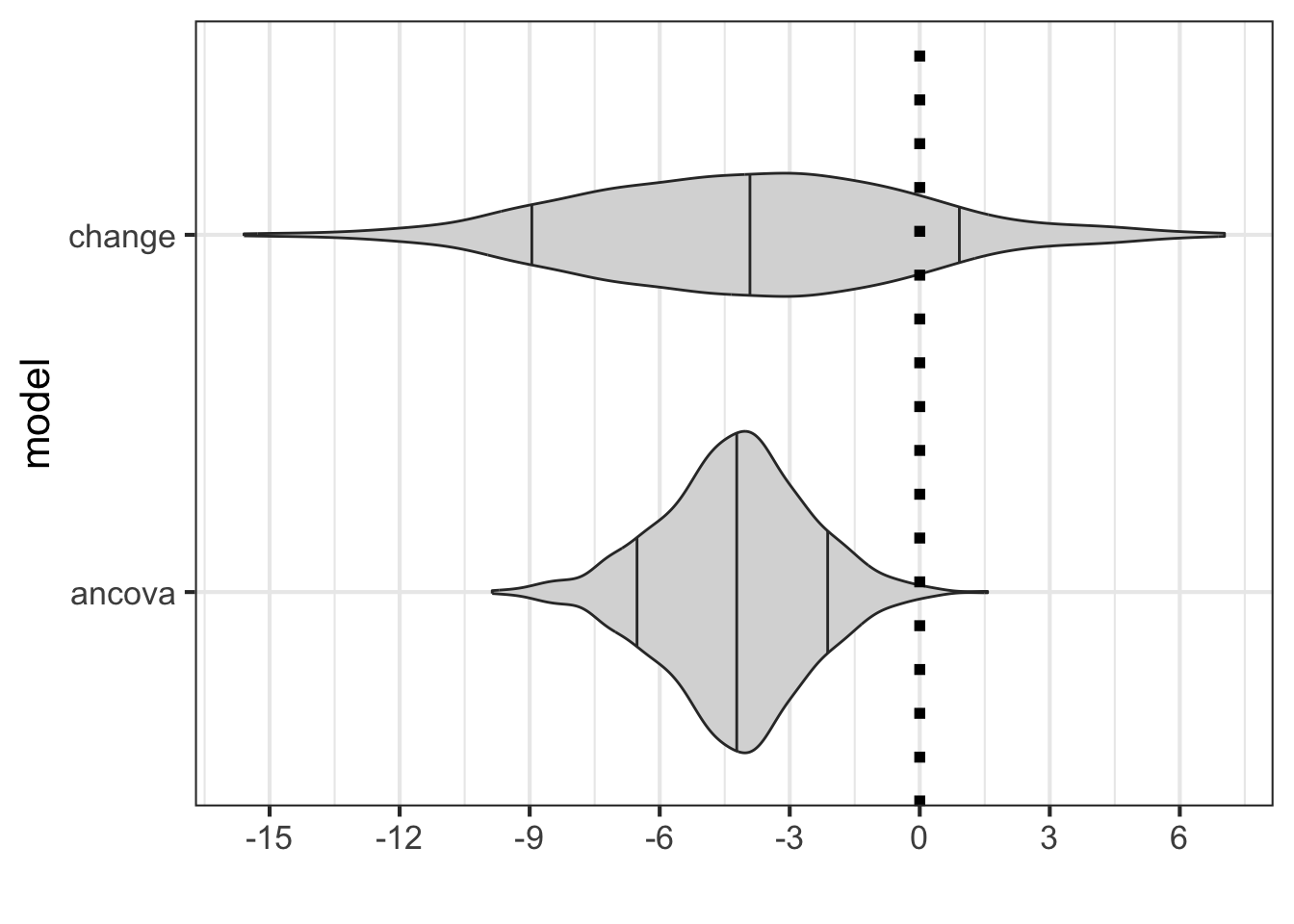

Again, let’s look at any differences by performing the simulation multiple times.

library(future)

plan(multicore)## Warning in supportsMulticoreAndRStudio(...): [ONE-TIME WARNING] Forked

## processing ('multicore') is not supported when running R from RStudio because

## it is considered unstable. For more details, how to control forked processing

## or not, and how to silence this warning in future R sessions, see

## ?parallelly::supportsMulticoresim_args <- list(

formula = PDI ~ PDI_pre + treatment,

fixed = list(

PDI_pre = list(var_type = 'continuous',

mean = 0, sd = 17.17, floor = -38.14, ceiling = 31.86),

treatment = list(var_type = 'factor',

levels = c('control', 'treatment'))

),

error = list(variance = 150),

sample_size = 50,

reg_weights = c(0, -0.5, -10),

replications = 1000

)

pain_data_repl <- replicate_simulation(sim_args)

change_mod <- lapply(seq_along(pain_data_repl), function(xx)

broom::tidy(lm(I(PDI - PDI_pre) ~ 1 + treatment, data = pain_data_repl[[xx]]))) |>

dplyr::bind_rows() |>

dplyr::mutate(model = 'change')

ancova_mod <- lapply(seq_along(pain_data_repl), function(xx)

broom::tidy(lm(PDI ~ PDI_pre + treatment, data = pain_data_repl[[xx]]))) |>

dplyr::bind_rows() |>

dplyr::mutate(model = 'ancova')

combine_data <- dplyr::bind_rows(

change_mod,

ancova_mod

)combine_data |>

dplyr::filter(term == 'treatment') |>

gf_violin(model ~ estimate, fill = 'grey85', draw_quantiles = c(0.1, 0.5, 0.9))

Non-equivalent control groups

What happens if the control group is not equivalent to the treatment group? One way this can be simulated is to have the treatment allocation not be random, but instead have the treatment allocation be dependent on the pre test score.

sim_args <- list(

formula = PDI ~ PDI_pre + treatment,

fixed = list(

PDI_pre = list(var_type = 'continuous',

mean = 0, sd = 17.17, floor = -38.14, ceiling = 31.86),

treatment = list(var_type = 'ordinal',

levels = c(0:1))

),

error = list(variance = 150),

sample_size = 50,

reg_weights = c(0, -0.5, -10),

correlate = list(fixed = data.frame(x = c('PDI_pre'),

y = c('treatment'),

corr = c(0.6)))

)

pain_data <- simulate_fixed(data = NULL, sim_args) |>

correlate_variables(sim_args) |>

simulate_error(sim_args) |>

generate_response(sim_args)

head(pain_data)## X.Intercept. PDI_pre_old treatment_old level1_id PDI_pre treatment_corr

## 1 1 -38.140000 0 1 38.132395 1.6432384

## 2 1 -20.175242 0 2 20.167638 1.3270077

## 3 1 -19.148405 0 3 19.140801 1.3089325

## 4 1 -37.993076 0 4 37.985471 1.6406521

## 5 1 -24.266680 1 5 24.273153 0.5992763

## 6 1 -5.568467 0 6 5.560865 1.0698870

## treatment error fixed_outcome random_effects PDI

## 1 1 12.142795 -29.06620 0 -16.923402

## 2 1 1.872014 -20.08382 0 -18.211805

## 3 1 -3.643485 -19.57040 0 -23.213885

## 4 1 -8.480236 -28.99274 0 -37.472971

## 5 1 -15.550021 -22.13658 0 -37.686597

## 6 1 8.048826 -12.78043 0 -4.731607df_stats(PDI_pre ~ treatment, data = pain_data, mean, median, sd, length)## response treatment mean median sd length

## 1 PDI_pre 0 -5.793162 -4.779870 14.35516 21

## 2 PDI_pre 1 8.734501 7.060418 19.94657 29df_stats(PDI_pre ~ treatment_old, data = pain_data, mean, median, sd, length)## response treatment_old mean median sd length

## 1 PDI_pre 0 3.783804 -0.9768078 19.32784 23

## 2 PDI_pre 1 1.652468 4.6230295 19.17271 27summary(lm(PDI ~ PDI_pre + treatment, data = pain_data))##

## Call:

## lm(formula = PDI ~ PDI_pre + treatment, data = pain_data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -24.9430 -6.4403 -0.7748 9.8293 22.9479

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 2.05899 2.62678 0.784 0.43706

## PDI_pre -0.49700 0.09526 -5.217 4.02e-06 ***

## treatment -14.54456 3.64510 -3.990 0.00023 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 11.77 on 47 degrees of freedom

## Multiple R-squared: 0.5944, Adjusted R-squared: 0.5771

## F-statistic: 34.44 on 2 and 47 DF, p-value: 6.172e-10summary(lm(I(PDI - PDI_pre) ~ treatment, data = pain_data))##

## Call:

## lm(formula = I(PDI - PDI_pre) ~ treatment, data = pain_data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -49.897 -22.862 -3.191 22.363 58.270

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 10.731 6.355 1.689 0.0978 .

## treatment -36.293 8.345 -4.349 7.09e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 29.12 on 48 degrees of freedom

## Multiple R-squared: 0.2827, Adjusted R-squared: 0.2677

## F-statistic: 18.92 on 1 and 48 DF, p-value: 7.092e-05library(future)

plan(multicore)

sim_args <- list(

formula = PDI ~ PDI_pre + treatment,

fixed = list(

PDI_pre = list(var_type = 'continuous',

mean = 0, sd = 17.17, floor = -38.14, ceiling = 31.86),

treatment = list(var_type = 'ordinal',

levels = c(0:1))

),

error = list(variance = 150),

sample_size = 50,

reg_weights = c(0, -0.5, -10),

correlate = list(fixed = data.frame(x = c('PDI_pre'),

y = c('treatment'),

corr = c(0.6))),

replications = 1000

)

pain_data_repl <- replicate_simulation(sim_args)

change_mod <- lapply(seq_along(pain_data_repl), function(xx)

broom::tidy(lm(I(PDI - PDI_pre) ~ 1 + treatment, data = pain_data_repl[[xx]]))) |>

dplyr::bind_rows() |>

dplyr::mutate(model = 'change')

ancova_mod <- lapply(seq_along(pain_data_repl), function(xx)

broom::tidy(lm(PDI ~ PDI_pre + treatment, data = pain_data_repl[[xx]]))) |>

dplyr::bind_rows() |>

dplyr::mutate(model = 'ancova')

combine_data <- dplyr::bind_rows(

change_mod,

ancova_mod

)combine_data |>

dplyr::filter(term == 'treatment') |>

gf_violin(model ~ estimate, fill = 'grey85', draw_quantiles = c(0.1, 0.5, 0.9)) |>

gf_refine(scale_x_continuous("", breaks = seq(-55, 5, 5)))

library(future)

plan(multicore)

sim_args <- list(

formula = PDI ~ PDI_pre + treatment,

fixed = list(

PDI_pre = list(var_type = 'continuous',

mean = 0, sd = 17.17, floor = -38.14, ceiling = 31.86),

treatment = list(var_type = 'ordinal',

levels = c(0:1))

),

error = list(variance = 150),

sample_size = 50,

reg_weights = c(0, -0.5, -10),

correlate = list(fixed = data.frame(x = c('PDI_pre'),

y = c('treatment'),

corr = c(-0.4))),

replications = 1000

)

pain_data_repl <- replicate_simulation(sim_args)

change_mod <- lapply(seq_along(pain_data_repl), function(xx)

broom::tidy(lm(I(PDI - PDI_pre) ~ 1 + treatment, data = pain_data_repl[[xx]]))) |>

dplyr::bind_rows() |>

dplyr::mutate(model = 'change')

ancova_mod <- lapply(seq_along(pain_data_repl), function(xx)

broom::tidy(lm(PDI ~ PDI_pre + treatment, data = pain_data_repl[[xx]]))) |>

dplyr::bind_rows() |>

dplyr::mutate(model = 'ancova')

combine_data <- dplyr::bind_rows(

change_mod,

ancova_mod

)

combine_data |>

dplyr::filter(term == 'treatment') |>

gf_violin(model ~ estimate, fill = 'grey85', draw_quantiles = c(0.1, 0.5, 0.9)) |>

gf_refine(scale_x_continuous("", breaks = seq(-35, 45, 5)))