Linear Regression Inference

Inference for regression parameters

It is often of interest to perform inference about the regression parameters that we have estimated thus far. The reason inference is useful is based on the idea that for most problems the sample is used to approximate the population. Therefore, a subset of the population (the sample) is used to estimate the population parameters that are of most interest. As such, our estimates come with error and this uncertainty we can quantify when making inference about the parameter estimates.

In this course, I plan to show you two ways to perform this inference. One of those frameworks will be the classical approach which uses classical statistical theory to estimate the amount of uncertainty in the parameter estimates. The second approach will use the bootstrap as a way to computationally estimate the uncertainty. The benefit of the bootstrap is that it comes with fewer assumptions than the classical approach. We will build up to these arguments.

Classical Inferential Framework

The classical inferential framework, sometimes referred to as the null hypothesis significance test (NHST) has been around for more than 100 years. This framework builds off of the idea of a null hypothesis.

A null hypothesis is typically thought as a hypothesis that assumes there is no relationship or a null effect. Framing this in the regression concept that we have been working with, we could define the following null hypotheses.

\[ H_{0}: \beta_{0} = 0.\ The\ population\ yintercept\ equals\ 0. \]

or

\[ H_{0}: \beta_{1} = 0.\ The\ population\ slope\ equals\ 0. \]

In the following two null hypotheses, represented with \(H_{0}\), the population parameters are being assumed to be 0 in the population. This is one definition of a null effect, but is not the only null effect we can define (more on this later). The value defined as a null effect is important as it centers the sampling distribution used for evaluating where the sample estimate falls in that distribution.

Another hypothesis is typically defined with the null hypothesis, called the alternative hypothesis. This hypothesis states that there is an effect. Within the linear regression framework, we could write the alternative hypotheses as:

\[ H_{A}: \beta_{0} \neq 0.\ The\ population\ yintercept\ is\ not\ equal\ to\ 0. \]

or

\[ H_{A}: \beta_{1} \neq 0.\ The\ population\ slope\ is\ not\ equal\ to\ 0. \]

In the following two alternative hypotheses, represented with \(H_{A}\), the population parameters are assumed to be not equal to 0. These can also be one-sided, more on this with an example later.

Estimating uncertainty in parameter estimates

The standard error is used to estimate uncertainty or error in the parameter estimates due to having a sample from the population. More specifically, this means that the entire population is not used to estimate the parameter, therefore the estimate we have is very likely not equal exactly to the parameter. Instead, there is some sort of sampling error involved that we want to quantify. If the sample was collected well, ideally randomly, then the estimate should be unbiased. Unbiased here doesn’t mean that the estimate equals the population parameter, rather, that through repeated sampling, the average of our sample estimates would equal the population parameter.

As mentioned, standard errors are used to quantify this uncertainty. In the linear regression case we have explored so far, there are mathematical formula for the standard errors. These are shown below.

\[ SE\left( \hat{\beta}_{0} \right) = \sqrt{\hat{\sigma}^2 \left( \frac{1}{n} + \frac{\bar{X}^2}{\sum \left( X - \bar{X} \right)^2} \right)} \]

and

\[ SE\left( \hat{\beta}_{1} \right) = \sqrt{\frac{\hat{\sigma}^2}{\sum \left( X - \bar{X} \right)^2}} \]

In the equation above, \(\hat{\sigma}^2\), is equal to \(\sqrt{\frac{SS_{error}}{n - 2}}\), and \(n\) is the sample size (ie, number of rows of data in the model).

Matrix Representation

It is also possible, and more easily extendable, to write the standard error computations or the variance of the estimated parameters in matrix representation. This framework extends beyond the single predictor case (ie. one \(X\)), therefore is more readily used in practice.

\[ \hat{var}\left({\hat{\beta}}\right) = \hat{\sigma}^2 \left( \mathbf{X}^{`}\mathbf{X} \right)^{-1} \]

In the equation above, \(\hat{\sigma}^2\), is equal to \(\sqrt{\frac{SS_{error}}{n - 2}}\), and \(\mathbf{X}\) is the design matrix from the regression analysis. Finally, to get the standard errors back, you take the square root of the diagonal elements.

$$ SE( ) =

Data

The data for this section of notes will explore data from the Environmental Protection Agency on Air Quality collected for the state of Iowa in 2021. The data are daily values for PM 2.5 particulates. The attributes included in the data are shown below with a short description.

| Variable | Description |

|---|---|

| date | Date of observation |

| id | Site ID |

| poc | Parameter Occurrence Code (POC) |

| pm2.5 | Average daily pm 2.5 particulate value, in (ug/m3; micrograms per meter cubed) |

| daily_aqi | Average air quality index |

| site_name | Site Name |

| aqs_parameter_desc | Text Description of Observation |

| cbsa_code | Core Based Statistical Area (CBSA) ID |

| cbsa_name | CBSA Name |

| county | County in Iowa |

| avg_wind | Average daily wind speed (in knots) |

| max_wind | Maximum daily wind speed (in knots) |

| max_wind_hours | Time of maximum daily wind speed |

Guiding Question

How is average daily wind speed related to the daily air quality index?

library(tidyverse)## Warning: package 'ggplot2' was built under R version 4.3.2## Warning: package 'tidyr' was built under R version 4.3.2## ── Attaching core tidyverse packages ──────────────────────── tidyverse 2.0.0 ──

## ✔ dplyr 1.1.4 ✔ readr 2.1.5

## ✔ forcats 1.0.0 ✔ stringr 1.5.1

## ✔ ggplot2 3.5.0 ✔ tibble 3.2.1

## ✔ lubridate 1.9.3 ✔ tidyr 1.3.1

## ✔ purrr 1.0.2

## ── Conflicts ────────────────────────────────────────── tidyverse_conflicts() ──

## ✖ dplyr::filter() masks stats::filter()

## ✖ dplyr::lag() masks stats::lag()

## ℹ Use the conflicted package (<http://conflicted.r-lib.org/>) to force all conflicts to become errorslibrary(ggformula)## Loading required package: scales

##

## Attaching package: 'scales'

##

## The following object is masked from 'package:purrr':

##

## discard

##

## The following object is masked from 'package:readr':

##

## col_factor

##

## Loading required package: ggridges## Warning: package 'ggridges' was built under R version 4.3.2##

## New to ggformula? Try the tutorials:

## learnr::run_tutorial("introduction", package = "ggformula")

## learnr::run_tutorial("refining", package = "ggformula")library(mosaic)## Warning: package 'mosaic' was built under R version 4.3.2## Registered S3 method overwritten by 'mosaic':

## method from

## fortify.SpatialPolygonsDataFrame ggplot2

##

## The 'mosaic' package masks several functions from core packages in order to add

## additional features. The original behavior of these functions should not be affected by this.

##

## Attaching package: 'mosaic'

##

## The following object is masked from 'package:Matrix':

##

## mean

##

## The following object is masked from 'package:scales':

##

## rescale

##

## The following objects are masked from 'package:dplyr':

##

## count, do, tally

##

## The following object is masked from 'package:purrr':

##

## cross

##

## The following object is masked from 'package:ggplot2':

##

## stat

##

## The following objects are masked from 'package:stats':

##

## binom.test, cor, cor.test, cov, fivenum, IQR, median, prop.test,

## quantile, sd, t.test, var

##

## The following objects are masked from 'package:base':

##

## max, mean, min, prod, range, sample, sumtheme_set(theme_bw(base_size = 18))

airquality <- readr::read_csv("https://raw.githubusercontent.com/lebebr01/psqf_6243/main/data/iowa_air_quality_2021.csv")## Rows: 6917 Columns: 10

## ── Column specification ────────────────────────────────────────────────────────

## Delimiter: ","

## chr (5): date, site_name, aqs_parameter_desc, cbsa_name, county

## dbl (5): id, poc, pm2.5, daily_aqi, cbsa_code

##

## ℹ Use `spec()` to retrieve the full column specification for this data.

## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.wind <- readr::read_csv("https://raw.githubusercontent.com/lebebr01/psqf_6243/main/data/daily_WIND_2021-iowa.csv")## Rows: 1537 Columns: 5

## ── Column specification ────────────────────────────────────────────────────────

## Delimiter: ","

## chr (2): date, cbsa_name

## dbl (3): avg_wind, max_wind, max_wind_hours

##

## ℹ Use `spec()` to retrieve the full column specification for this data.

## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.airquality <- airquality |>

left_join(wind, by = c('cbsa_name', 'date')) |>

drop_na()## Warning in left_join(airquality, wind, by = c("cbsa_name", "date")): Detected an unexpected many-to-many relationship between `x` and `y`.

## ℹ Row 21 of `x` matches multiple rows in `y`.

## ℹ Row 1 of `y` matches multiple rows in `x`.

## ℹ If a many-to-many relationship is expected, set `relationship =

## "many-to-many"` to silence this warning.air_lm <- lm(daily_aqi ~ avg_wind, data = airquality)

coef(air_lm)## (Intercept) avg_wind

## 48.222946 -2.211798summary(air_lm)$r.square## [1] 0.08528019summary(air_lm)$sigma## [1] 18.05479sum_x <- airquality |>

summarise(mean_wind = mean(avg_wind),

sum_dev_x_sq = sum( (avg_wind - mean_wind) ^ 2))

sum_x## # A tibble: 1 × 2

## mean_wind sum_dev_x_sq

## <dbl> <dbl>

## 1 4.27 29937.se_b0 <- sqrt(summary(air_lm)$sigma^2 * ((1 / nrow(airquality)) + ( sum_x[['mean_wind']]^2 / sum_x[['sum_dev_x_sq']]) ))

se_b1 <- sqrt(summary(air_lm)$sigma^2 / sum_x[['sum_dev_x_sq']])

se_b0## [1] 0.51551se_b1## [1] 0.1043487X <- model.matrix(air_lm)

var_b <- summary(air_lm)$sigma^2 * solve(t(X) %*% X)

var_b ## (Intercept) avg_wind

## (Intercept) 0.26575054 -0.04644803

## avg_wind -0.04644803 0.01088865sqrt(diag(var_b))## (Intercept) avg_wind

## 0.5155100 0.1043487summary(air_lm)$coefficients[,2]## (Intercept) avg_wind

## 0.5155100 0.1043487Moving toward inference

Now that there the parameters are estimated and the amount of uncertainty is quantified, inference is possible. There are two related pieces that can be computed now, a confidence interval and/or the test-statistic and p-value. Let’s go through both.

First, a confidence interval can be computed. Confidence intervals take the following general form:

\[ \hat{\beta} \pm C * SE \]

Where, \(\hat{\beta}\) is the parameter estimate, \(C\) is the confidence level, and \(SE\) is the standard error. The parameter estimates and standard errors are what we have already established, the \(C\) is the confidence level. This indicates the percentage of times, over the long run/repeated sampling, that the interval will capture the population parameter. Historically, this value is often specified as 95%, but any value is theoretically possible.

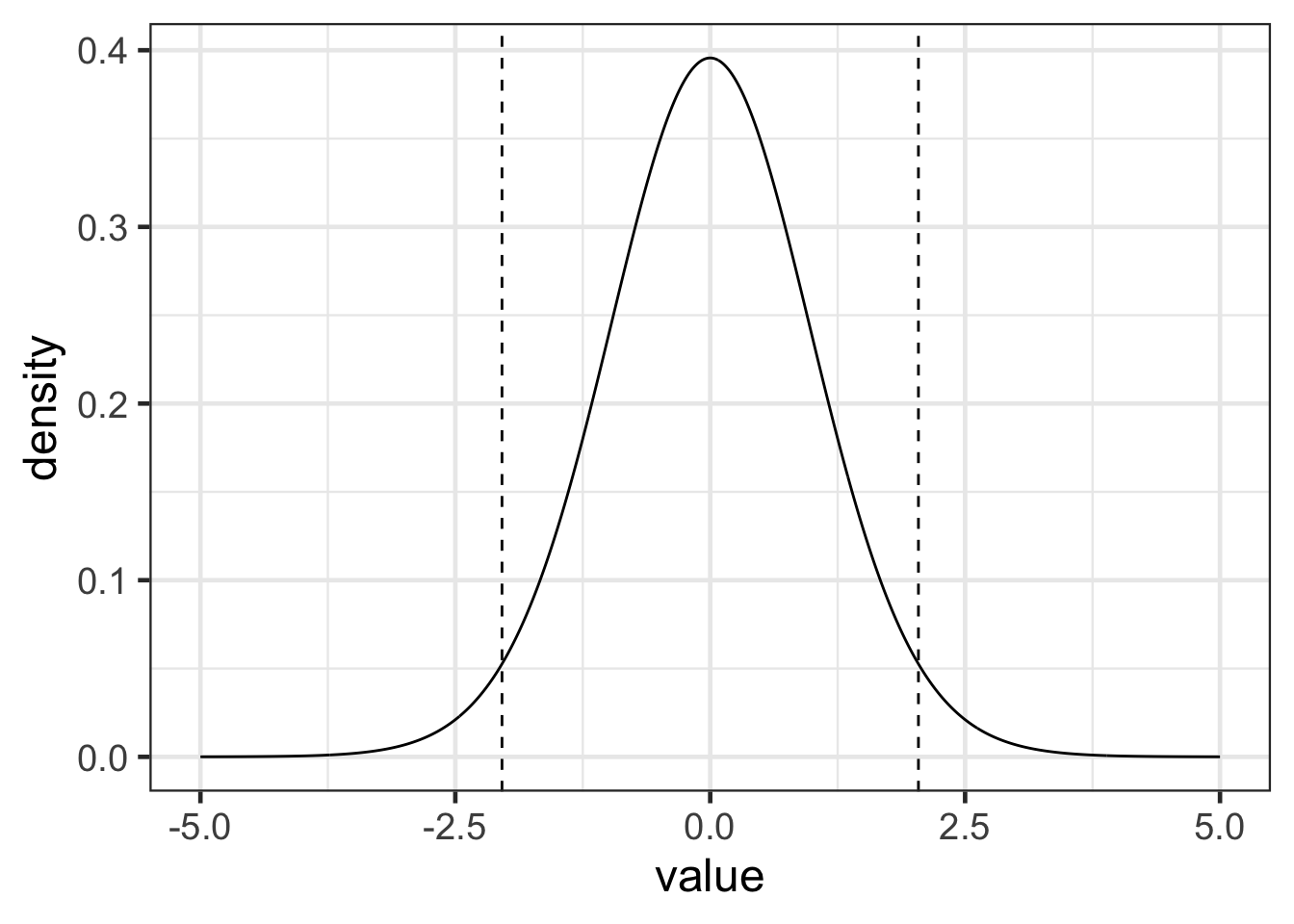

The \(C\) value represents a quantile from a mathematical distribution that separates the middle percetage desired (ie, 95%) from the rest of the distribution. The mathematical distribution is most often the t-distribution, but the difference between a t-distribution and normal distribution are modest once the sample size is greater than 30 or so.

The figure below tries to highlight the \(C\) value.

t_30 <- data.frame(value = seq(-5, 5, .01), density = dt(seq(-5, 5, .01), df = 30))

gf_line(density ~ value, data = t_30) |>

gf_vline(xintercept = ~ qt(.025, df = 30), linetype = 2) |>

gf_vline(xintercept = ~ qt(.975, df = 30), linetype = 2)

coef(air_lm)## (Intercept) avg_wind

## 48.222946 -2.211798summary(air_lm)$coefficients[,2]## (Intercept) avg_wind

## 0.5155100 0.1043487abs(qt(.025, df = nrow(airquality) -2))## [1] 1.96045648.2 + c(-1, 1) * 1.96 * .5155## [1] 47.18962 49.21038-2.211 + c(-1, 1) * 1.96 * .1043## [1] -2.415428 -2.006572Inference with test statistics

It is also possible to do inference with a test statistic and computation of a p-value. Inference in this framework can be summarized into the following steps:

- Generate hypotheses, ie null and alternative hypotheses.

- Establish an \(\alpha\) value

- Estimate parameters

- Compute test statistic

- Generate p-value

An \(\alpha\) value is the level of significance and represents the probability of obtaining the results due to chance. This is a value that the researcher can select. For a 5% \(\alpha\) value, this is what was used above to compute the confidence intervals.

The test statistic is computed as follows:

\[ test\ stat = \frac{\hat{\beta} - hypothesized\ value}{SE(\hat{\beta})} \]

where \(\hat{\beta}\) is the estimated parameter, \(SE(\hat{\beta})\) is the standard error of the parameter estimate, and the \(hypothesized\ value\) is the hypothesized value from the null hypothesis. This is often 0, but does not need to be zero.

Let’s assume the following null/alternative hypotheses:

\[ H_{0}: \beta_{1} = 0 \\ H_{A}: \beta_{1} \neq 0 \]

Let’s use R to compute this test statistic.

t = (-2.211 - 0) / .1043

t |> round(3)## [1] -21.198pt(-21.198, df = nrow(airquality) -2, lower.tail = TRUE) |>

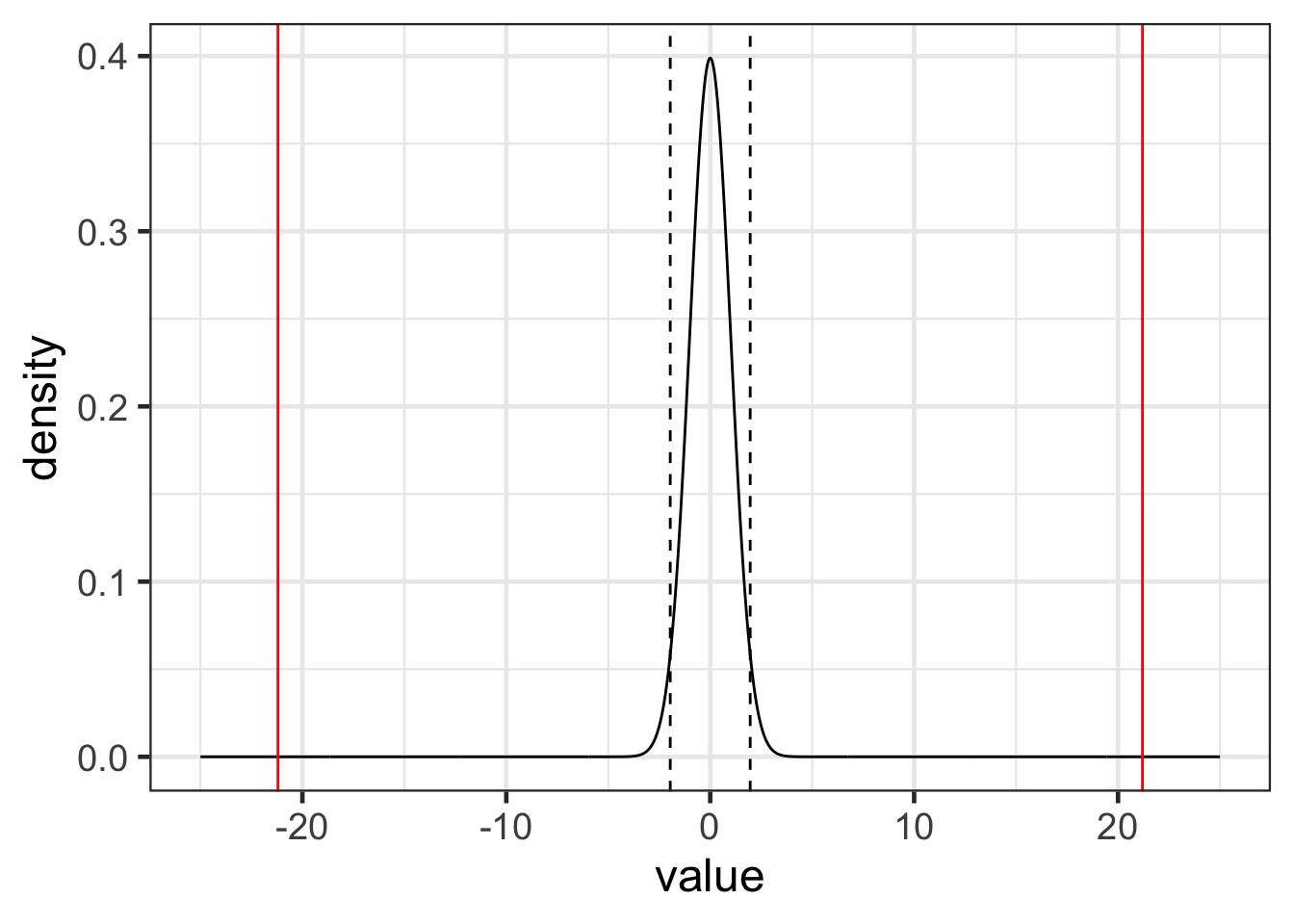

round(3)## [1] 0t_dist <- data.frame(value = seq(-25, 25, .05), density = dt(seq(-25, 25, .05), df = (nrow(airquality) - 2)))

gf_line(density ~ value, data = t_dist) |>

gf_vline(xintercept = ~ qt(.025, df = (nrow(airquality) - 2)), linetype = 2) |>

gf_vline(xintercept = ~ qt(.975, df = (nrow(airquality) - 2)), linetype = 2) |>

gf_vline(xintercept = ~ -21.198, color = 'red') |>

gf_vline(xintercept = ~ 21.198, color = 'red')

summary(air_lm)##

## Call:

## lm(formula = daily_aqi ~ avg_wind, data = airquality)

##

## Residuals:

## Min 1Q Median 3Q Max

## -41.71 -14.38 -0.73 12.43 86.84

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 48.2229 0.5155 93.54 <2e-16 ***

## avg_wind -2.2118 0.1043 -21.20 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 18.05 on 4819 degrees of freedom

## Multiple R-squared: 0.08528, Adjusted R-squared: 0.08509

## F-statistic: 449.3 on 1 and 4819 DF, p-value: < 2.2e-16confint(air_lm)## 2.5 % 97.5 %

## (Intercept) 47.212311 49.233581

## avg_wind -2.416369 -2.007227confint(air_lm, level = .5)## 25 % 75 %

## (Intercept) 47.875214 48.57068

## avg_wind -2.282185 -2.14141